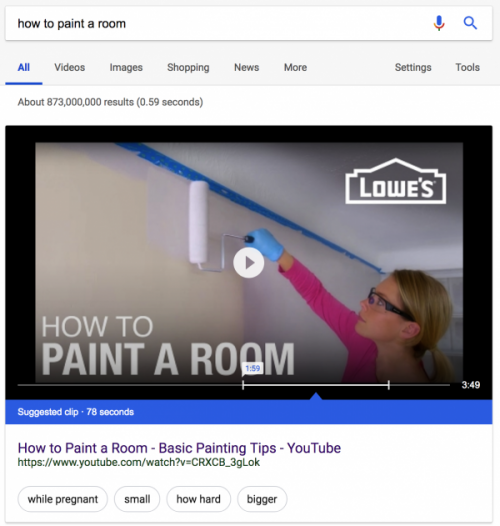

Early last year, Google released Suggested Clips, a type of Featured Snippet that appears at the top of SERPs for how-to process queries with visual intent. Unlike traditional video search results, this feature jumps to the specific point in the selected video where the answer to the search query appears.

In particular, this feature tends to highlight sequences of instructions. Extracting such a sequence from a video is powerful because it opens up access to knowledge that is not easily represented as a Knowledge Graph entry, a closed fact, or a simple text extraction.

How Google Selects a Suggested Clip

After reading through some Google research papers and studying these results, I believe the process for selecting these Suggested Clips is reasonably straightforward.

1. Identify Videos with Transcriptions (User-Provided or Automatic)

For this analysis to occur, a video must have a human-provided or automatic transcription of its audio. A transcript allows Google to parse the spoken content with the same NLP tools it applies to ordinary text.

YouTube has invested heavily in automatic captioning over the last few years, and it has improved significantly. The YouTube captioning team shared at VidCon two years ago that they had automatic captions on 1 billion videos, with accuracy increased by 50% and major enhancements in entity detection. These improvements laid the groundwork to more accurately support Suggested Clips.

However, automatic captioning/transcription is flawed. It can misrecognize words, introduce grammar errors (which interfere with NLP), omit punctuation, and struggle to pick out voices against competing sounds.

If you have the resources, providing your own transcription can give a video a slight edge by reducing these errors.

2. Leverage Videos with Text-based Reference Documents

An essential requirement for higher precision in determining a Suggested Clip is using a separate, non-transcription reference text that can be processed using NLP and referenced against the transcript. While the analysis can be done on the transcription alone, a reference text provides a structured comparison that improves the accuracy and confidence in the selected clip.

In simple terms, a video is more likely to get a Suggested Clip if there is a corresponding text reference that follows natural language and post structure best practices. This text, which outlines the procedure and its steps, can appear in a separate article or in the video's YouTube description. The video is linked to a separate article URL through traditional video SEO practices, such as video structured data, video sitemaps, and a link to the article in the YouTube video's description.

3. Apply NLP to Extract Process from Text-based References

Google will apply NLP to the reference text to extract the procedure as a series of “micro-steps.”

Example Keyword: “how to delete twitter”

Suggested Clip Video (starting at 0:26): https://www.youtube.com/watch?v=Qpr3hZPnBcQ

Blog Post Linked in Description: https://techboomers.com/delete-twitter-account

Within this blog post, there is a straightforward process outlined using an ordered list of steps in the imperative mood (command or request that has an explicit verb).

“Is Twitter just too much of a noise-filled squawk box for you? Thinking of flying the coop and trying out Twitter alternative? In this article, we’re going to show you how to delete your Twitter account in five simple steps:

1. Go to www.twitter.com and log in.

2. Click on your profile picture, and then select Settings.

3. Click Account, and then click Deactivate My Account.

4. Read the warnings (or not), and then click Deactivate [your Twitter user name].

5. Enter your password, and then click Deactivate Account.

Need a little more guidance than that? Below are detailed instructions with screenshots to point you in the right direction.”

Placing these steps in a semantically structured HTML ordered list simplifies the extraction, but a list built with headings would also work. From here, Google can run simple NLP to extract the micro-steps.

Process for Extracting Micro-Steps

- Identify semantic content structuring that is indicative of a process (list formatting).

- Identify words that signify procedures (“steps,” “procedure,” “method,” “process”) to focus the extraction.

- Identify text chunks with verbs that action on nouns/entities (especially those nouns/entities with high salience scores for the query).

- Look for a whitelisted set of action/process verbs (cook, click, enter, go, turn, push).

- Split sentences at coordinating conjunctions (for, and, nor, but, or, yet, so), and convert the resulting pieces into additional micro-steps.

- Retain nouns that are a direct object of a verb, either directly or via a preposition such as “of” (“Add a cup of flour”).

- Discard steps that lack a clear verb.
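To make the heuristics above concrete, here is a minimal Python sketch that applies three of them (split at conjunctions, look for a whitelisted action verb, discard verbless pieces) to the five Twitter steps. The verb whitelist is a hypothetical stand-in, not Google's list, and the sketch ignores salience scores and list detection.

```python
import re

# Hypothetical whitelist of action verbs (illustrative only; not Google's list).
ACTION_VERBS = {"go", "log", "click", "select", "read", "enter", "type",
                "scroll", "add", "cook", "turn", "push", "peel"}
# Coordinating conjunctions treated as micro-step boundaries.
CONJUNCTIONS = {"for", "and", "nor", "but", "or", "yet", "so"}


def extract_micro_steps(step_text: str) -> list[str]:
    """Split one written instruction into verb-led micro-steps."""
    words = re.findall(r"[a-z'-]+", step_text.lower())

    # Break the word stream at every conjunction.
    chunks, current = [], []
    for word in words:
        if word in CONJUNCTIONS:
            if current:
                chunks.append(current)
            current = []
        else:
            current.append(word)
    if current:
        chunks.append(current)

    # Keep each chunk from its first whitelisted verb onward; drop verbless chunks.
    micro_steps = []
    for chunk in chunks:
        verb_positions = [i for i, w in enumerate(chunk) if w in ACTION_VERBS]
        if verb_positions:
            micro_steps.append(" ".join(chunk[verb_positions[0]:]))
    return micro_steps


reference_steps = [
    "Go to www.twitter.com and log in.",
    "Click on your profile picture, and then select Settings.",
    "Click Account, and then click Deactivate My Account.",
    "Read the warnings (or not), and then click Deactivate [your Twitter user name].",
    "Enter your password, and then click Deactivate Account.",
]
all_steps = [m for step in reference_steps for m in extract_micro_steps(step)]
print(len(all_steps))  # 10
```

The fragment “(or not)” becomes its own chunk with no verb and is dropped, which is how the sketch arrives at the ten micro-steps counted above.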

With this process, Google NLP could pull out ten distinct micro-steps from the Twitter example text above.

4. Identify Process Steps in Video

The same style of NLP is applied to the transcript of the video. Each micro-step is extracted with its corresponding timestamp and a variable amount of extra time before and after that segment.

Looking at the transcription of our example video, we’ll see several issues with the transcription. It has simple errors, such as transcribing “Twitter.com” as “Twitter calm.” It also has no punctuation or complete sentences. However, if you look for action verbs, you can see the simple micro-steps that can be extracted using the NLP process outlined above.

00:25 – to start go to Twitter calm in your web browser and log into your account if you

00:32 – haven’t already in the top right corner click on your

00:37 – profile picture and select settings from the drop down menu from the menu on the

00:45 – Left select account then scroll down to the bottom of the page and click

00:51 – deactivate my account read the information associated with deactivating

00:57 – your Twitter account then click the button that says deactivate and your

01:01 – username you will be asked to re-enter

01:05 – your password to confirm that you want

01:07 – to deactivate your account type in your

01:09 – password and then click deactivate

01:11 – account and that’s it your account has

01:15 – now been deactivated and will be

This transcript has eleven micro-steps that can be extracted using simple NLP.
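The sketch below runs the same kind of extraction over the timestamped captions, tagging every word with the start time of its caption line and padding each micro-step by a fixed amount. Because the transcript has no punctuation, it assumes one extra heuristic not described above: every whitelisted verb also starts a new micro-step. The verb list and the one-second padding are hypothetical values.

```python
import re

# Hypothetical verb whitelist and conjunction set (illustrative only).
ACTION_VERBS = {"go", "log", "click", "select", "read", "enter", "type", "scroll"}
CONJUNCTIONS = {"for", "and", "nor", "but", "or", "yet", "so"}

CAPTIONS = [
    ("00:25", "to start go to Twitter calm in your web browser and log into your account if you"),
    ("00:32", "haven't already in the top right corner click on your"),
    ("00:37", "profile picture and select settings from the drop down menu from the menu on the"),
    ("00:45", "Left select account then scroll down to the bottom of the page and click"),
    ("00:51", "deactivate my account read the information associated with deactivating"),
    ("00:57", "your Twitter account then click the button that says deactivate and your"),
    ("01:01", "username you will be asked to re-enter"),
    ("01:05", "your password to confirm that you want"),
    ("01:07", "to deactivate your account type in your"),
    ("01:09", "password and then click deactivate"),
    ("01:11", "account and that's it your account has"),
    ("01:15", "now been deactivated and will be"),
]


def to_seconds(stamp: str) -> int:
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + int(seconds)


def transcript_micro_steps(captions, pad_before=1.0, pad_after=1.0):
    """Return (start, end, text) for each verb-led chunk of the transcript."""
    # Caption lines only carry a start time, so each word inherits its line's time.
    tagged = []
    for stamp, text in captions:
        t = to_seconds(stamp)
        tagged.extend((w, t) for w in re.findall(r"[a-z'-]+", text.lower()))

    # Conjunctions and action verbs both close the current chunk.
    chunks, current = [], []
    for word, t in tagged:
        if word in CONJUNCTIONS or word in ACTION_VERBS:
            if current:
                chunks.append(current)
            current = []
            if word in CONJUNCTIONS:
                continue
        current.append((word, t))
    if current:
        chunks.append(current)

    steps = []
    for chunk in chunks:
        if chunk[0][0] not in ACTION_VERBS:
            continue  # discard chunks that do not open with an action verb
        text = " ".join(w for w, _ in chunk)
        steps.append((max(0.0, chunk[0][1] - pad_before), chunk[-1][1] + pad_after, text))
    return steps


steps = transcript_micro_steps(CAPTIONS)
print(len(steps))  # 11
```

Keeping “re-enter” as a single token matters here: splitting it would expose “enter” as a verb and produce a spurious twelfth step.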

Google can also clarify the steps in the video transcript by looking for the nouns mentioned within the text-based reference. In simple terms, the blog post says “profile picture” in a step, so look for a verb actioning on “profile picture” in the transcript. Additionally, keyword relationships and salience scores can be used to identify alternative nouns, such as a reference article using “pasta” and the video saying “spaghetti.”

5. Connect Video Audio to Text Reference

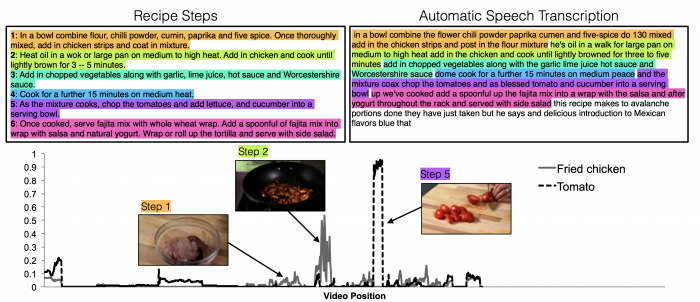

Google can now link a step in the text-based reference to a timestamp within the video. Here is an example of a text-based reference for a recipe being connected to the segments within the automatic transcription of a cooking video.

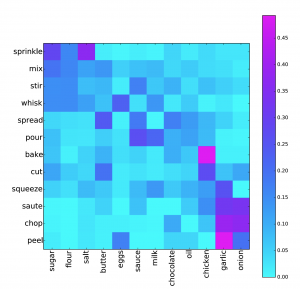
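One simple way to picture this linking step is an in-order search: for each (verb, noun) step from the reference text, find the next transcript segment that opens with the same verb and mentions the noun or an accepted alternative. The synonym table and the recipe segments below are made up for illustration, and the matching rule is an assumption rather than Google's documented method.

```python
# Hypothetical synonym table standing in for keyword-relationship data.
SYNONYMS = {"pasta": {"spaghetti", "penne"}}


def mentions(text: str, noun: str) -> bool:
    """True if the segment contains the noun or one of its listed alternatives."""
    padded = f" {text.lower()} "
    return any(f" {c} " in padded for c in {noun} | SYNONYMS.get(noun, set()))


def align(reference_steps, segments):
    """Match (verb, noun) reference steps to (start_seconds, text) segments, in order."""
    alignment, next_index = [], 0
    for verb, noun in reference_steps:
        match = None
        for i in range(next_index, len(segments)):
            start, text = segments[i]
            words = text.lower().split()
            if words and words[0] == verb and mentions(text, noun):
                match, next_index = start, i + 1
                break
        alignment.append((verb, noun, match))  # None means no confident match
    return alignment


reference = [("peel", "garlic"), ("boil", "pasta"), ("add", "salt")]
segments = [
    (5.0, "peel two cloves of garlic"),
    (12.0, "boil the spaghetti for ten minutes"),
    (20.0, "add a pinch of salt"),
]
print(align(reference, segments))
# [('peel', 'garlic', 5.0), ('boil', 'pasta', 12.0), ('add', 'salt', 20.0)]
```

Searching only forward from the previous match enforces the same step order as the reference text, which is one reason a video that follows its article closely is easier to align.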

From here, confidence can be further refined by computing an affordance probability, which measures the likelihood that an object appears as a direct object of an action.

This probability allows Google to more accurately select the correct segments from the transcript bag of words. This process helps to ensure that they choose a speech segment that contains “peel” and “garlic” and not one that contains “peel” and “sugar.”
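As a rough illustration, an affordance probability can be estimated from counts of verb/direct-object pairs in a parsed corpus, then used to pick between candidate segments. The counts below are invented, and estimating P(object | verb) from raw pair frequencies is an assumed simplification.

```python
from collections import Counter

# Toy verb/direct-object pairs standing in for a large parsed corpus (made-up counts).
PAIRS = ([("peel", "garlic")] * 40 + [("peel", "potato")] * 30
         + [("peel", "onion")] * 29 + [("peel", "sugar")] * 1
         + [("add", "sugar")] * 50)

pair_counts = Counter(PAIRS)
verb_counts = Counter(verb for verb, _ in PAIRS)


def affordance(verb: str, obj: str) -> float:
    """Estimated P(obj is the direct object | verb) from corpus counts."""
    if verb_counts[verb] == 0:
        return 0.0
    return pair_counts[(verb, obj)] / verb_counts[verb]


def best_segment(verb, candidates):
    """Pick the (start_seconds, noun) candidate whose noun best fits the verb."""
    return max(candidates, key=lambda c: affordance(verb, c[1]))


print(affordance("peel", "garlic"), affordance("peel", "sugar"))  # 0.4 0.01
print(best_segment("peel", [(14.0, "sugar"), (31.0, "garlic")]))  # (31.0, 'garlic')
```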

Important Note: I’ve seen this process fail on videos with audio interruptions that put significant time gaps between the micro-steps. In one video I researched, a vacuuming montage drowned out the voice audio and ran for about 10 seconds. On this video, Google cut the Suggested Clip where the vacuum noise started, even though the micro-steps resumed after the montage ended. There is likely a limited tolerance period for interruptions to the process.

6. Evaluate Timestamps with Visual Analysis

While the examples in this post are simple, connecting a reference article to a video that doesn’t follow the post exactly can be messy. To improve confidence further, Google can perform visual analysis on the frames around the timestamp to determine whether the referenced noun appears in the frame, since a video typically cuts to a shot of the object around the time the speaker starts to reference it. This can be done using a process similar to Google’s Cloud Video Intelligence API, which can detect cuts or individual shots and label those shots with entities (dog, flower, car, etc.). This analysis can be resource-intensive, so Google scales down the frame rate and resolution of the video to make it more efficient.

This process allows the micro-step text to be mapped to individual shots within the video.
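A minimal sketch of that mapping, assuming shot boundaries and entity labels have already been computed: a micro-step counts as visually confirmed if any shot overlapping a window around its timestamp carries the step's noun. The `Shot` structure, the three-second window, and the labels are hypothetical; this does not call the Cloud Video Intelligence API.

```python
from dataclasses import dataclass, field


@dataclass
class Shot:
    """One detected shot with entity labels (hypothetical structure, not an API type)."""
    start: float
    end: float
    labels: set[str] = field(default_factory=set)


def visually_confirmed(noun: str, timestamp: float, shots: list[Shot], window: float = 3.0) -> bool:
    """True if a shot overlapping [timestamp - window, timestamp + window] carries the noun."""
    lo, hi = timestamp - window, timestamp + window
    return any(s.start <= hi and s.end >= lo and noun in s.labels for s in shots)


shots = [
    Shot(0.0, 10.0, {"person", "kitchen"}),
    Shot(10.0, 18.0, {"garlic", "knife"}),
    Shot(18.0, 30.0, {"pot", "water"}),
]
print(visually_confirmed("garlic", 9.0, shots))   # True
print(visually_confirmed("garlic", 25.0, shots))  # False
```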

With all of these steps, and some timestamp padding before and after the segments, they’re able to determine where the micro-steps start, where they continue with minimal interruption, and where they end. If the confidence is high enough to string together a substantial duration of video, you end up with a Suggested Clip.

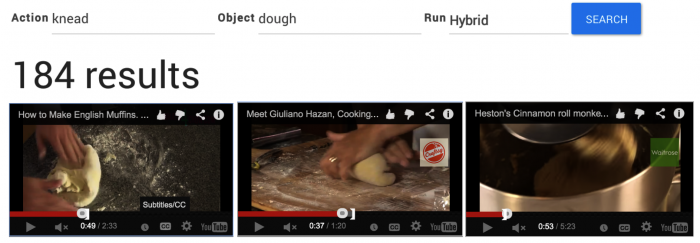
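The stitching described above, combined with the limited tolerance for interruptions noted earlier, can be sketched as: drop low-confidence micro-steps, merge padded steps whose gaps are short, and keep the longest run if it is long enough. The gap, confidence, and duration thresholds are hypothetical values chosen only to make the example work.

```python
def build_clip(steps, max_gap=5.0, min_confidence=0.6, min_duration=15.0):
    """steps: (start, end, confidence) per padded micro-step. Returns (start, end) or None."""
    runs = []
    for start, end, confidence in sorted(steps):
        if confidence < min_confidence:
            continue  # too uncertain to anchor the clip
        if runs and start - runs[-1][1] <= max_gap:
            runs[-1][1] = max(runs[-1][1], end)  # short interruption: extend the run
        else:
            runs.append([start, end])  # gap too long: start a new run
    if not runs:
        return None
    start, end = max(runs, key=lambda r: r[1] - r[0])
    return (start, end) if end - start >= min_duration else None


steps = [(24, 27, 0.9), (30, 34, 0.8), (36, 46, 0.9), (50, 55, 0.7),
         (60, 64, 0.3), (68, 72, 0.9)]
print(build_clip(steps))  # (24, 55)
```

The 13-second gap between 55 and 68 exceeds `max_gap`, so the clip ends at 55 even though a confident step follows, similar to the vacuum-montage cutoff described earlier.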

A New Type of Video Search

The immediate implications of this “search within video” style of indexing are that 1) Google can power Suggested Clip-style features in traditional search, and 2) it can start using the spoken content of a video to determine its relevance for a search query (which will entirely shift how YouTube SEO works). However, it also creates a whole new form of search against videos. Check out Google’s internal search engine built with this process.

This new approach can treat clips as documents, allowing users to search for videos that contain specific actions on objects, dropping them at the exact frame where that object and action appears in the video.

How to Optimize for Suggested Clips

In summary, there are some simple best practices to acquire a Suggested Clip and to make the content of your video indexable.

1. Create a Text-based Reference

This can be written before or after scripting and filming the video, but it should match the video closely. Writing this “article” before writing the video’s script can help sync the procedures.

- Outline the procedure in simple steps using the imperative mood, or in other words, simple action steps (“Add a cup of flour”).

- Using simple language, connect the verb to the noun in a single sentence or sentence fragment, remembering that conjunctions split steps.

- Use ordered lists or ordered headings to add semantic meaning to the text.

- Connect the text-based reference to the video by placing it in the video’s description or connecting the video to the reference URL by linking to it in the description, using video structured data, and/or video sitemaps.

- Review this guide to NLP SEO and this presentation on content formatting for NLP.

2. Optimize Audio for NLP

The video’s audio track should be easy for Google’s NLP to understand.

- Give steps in simple, imperative mood statements using common action verbs.

- Follow an order and process similar to the text-based reference.

- For the object of each verb, use the same noun/entity name as the text-based reference, or a semantically similar word (pasta and spaghetti).

- Eliminate distractions in the video between steps, like excessive b-roll or tangents that move away from the procedure.

- Record good quality audio (buy a microphone, and don’t use the on-camera mic).

- Ensure audio is well-optimized for auto transcription (high quality, clear audio, minimize cross-talking, minimize background sounds).

- Use cuts and jump cuts to eliminate distracting audio.

- Review automatic transcriptions to make corrections to anything that wasn’t correctly transcribed, especially action words, entities, and essential nouns (objects of the action).

- Provide your own transcription (if resources allow or YouTube hasn’t transcribed your video).

3. Optimize Visuals for Labeling

The visuals within the frame of video should reinforce the action and object pair visually and match with the audio delivering that step.

- Have a cut where introduction audio ends and the procedure audio begins.

- Ensure the visuals in the video reinforce the text of each micro-step when relevant (if you say “onion,” show an onion on the screen).

- Ensure the object is not obstructed or shown in poor lighting; eliminate anything that makes it hard to see.

- Ensure the object is large enough in the frame to be identified when the frame is sized down to 224 x 224 pixels.

- Show the object for at least 0.2 seconds.
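The last two checks are simple arithmetic. The sketch below estimates how large an object ends up after the frame is downscaled to 224 x 224, and how many frames 0.2 seconds represents at a given frame rate. It assumes a plain resize that does not preserve aspect ratio (a crop or letterbox would give different numbers), and the article gives no minimum pixel size, so none is checked here.

```python
import math


def downscaled_size(obj_w, obj_h, frame_w, frame_h, target=224):
    """Object size in pixels after the whole frame is resized to target x target.

    Assumes a plain resize that does not preserve aspect ratio.
    """
    return obj_w * target / frame_w, obj_h * target / frame_h


def min_frames_on_screen(fps, seconds=0.2):
    """Frames needed to keep an object visible for `seconds` at a given frame rate."""
    # round() guards against float noise such as 4.800000000000001 before ceil().
    return math.ceil(round(fps * seconds, 9))


w, h = downscaled_size(200, 150, 1920, 1080)
print(round(w, 1), round(h, 1))  # 23.3 31.1
print(min_frames_on_screen(30))  # 6
```

A 200 x 150 pixel object in a 1080p frame shrinks to roughly 23 x 31 pixels, which shows why small or distant objects may be hard to label.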

These steps won’t guarantee a Suggested Clip, but they should eliminate errors in the process and increase Google’s confidence in its selection of a clip.

How to Find Suggested Clip Opportunities

Now that we know how to get Suggested Clip Featured Snippets, the next step is identifying keywords and topics where we can acquire this search feature. The key here is identifying keywords with high video intent (where Google knows users have a preference for video content) that correspond with a visual procedure. Here are four simple processes for identifying these keywords.

1. Find YouTube Featured Snippets

Use a tool like SEMRush, which monitors rankings across hundreds of millions of keywords, to find where YouTube.com already has a Featured Snippet. These Featured Snippets are not always Suggested Clips, but you’ll find a significant number of Suggested Clip results using this method.

Go to SEMRush.com and search YouTube.com

- Go to Organic Research > Positions report

- Click advanced filters

- Filter for Include > SERP features > Featured snippet

- Filter for Include > Keyword > Contains > [Your Target Keyword]

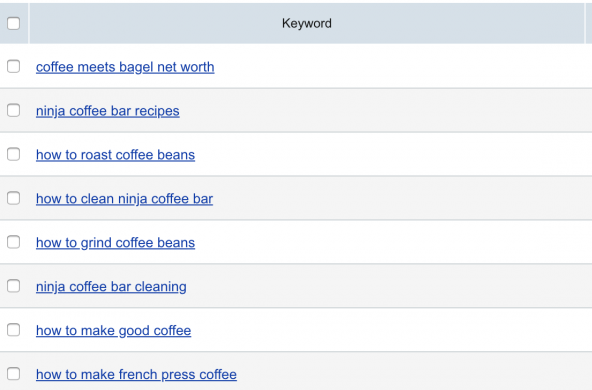

In this example, I filtered for the keyword “coffee,” and the results show that “how to make french press coffee” has a Suggested Clip.

2. Find “How To” Keywords Where YouTube Ranks Well

One way to find keywords with a high video intent is to find keywords where YouTube.com ranks well. These can be filtered for process keywords by filtering for “how” in addition to your target keyword. Again, SEMRush is valuable here.

- Go to Organic Research > Positions report

- Click advanced filters

- Filter for Include > Position > Less than > 6

- Filter for Include > Keyword > Contains > [Your Target Keyword]

- Filter for Include > Keyword > Contains > “how”

If my target keyword is “tent,” this returns keywords such as:

- how to set up a tent

- how to clean a tent

- how to fold a pop up tent
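If you export the Positions report instead of filtering in the interface, the same three filters can be applied offline. This sketch assumes a CSV with `Keyword` and `Position` columns; actual SEMRush export column names may differ, and the rows are made up.

```python
import csv
import io

# Made-up export rows; real SEMRush export column names may differ.
EXPORT = """Keyword,Position
how to set up a tent,2
how to clean a tent,4
best tent for camping,3
how to fold a pop up tent,5
how to pitch a tent in the rain,9
"""


def how_to_keywords(csv_text, target, max_position_exclusive=6):
    """Keywords containing the target and the word "how" that rank above the cutoff."""
    results = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        keyword = row["Keyword"].lower()
        if (target in keyword and "how" in keyword.split()
                and int(row["Position"]) < max_position_exclusive):
            results.append(row["Keyword"])
    return results


print(how_to_keywords(EXPORT, "tent"))
# ['how to set up a tent', 'how to clean a tent', 'how to fold a pop up tent']
```

Matching “how” as a whole word avoids false positives such as “show” that a plain substring filter would let through.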

3. Overlap YouTube.com with Another Domain

The one-keyword-at-a-time approach can be a bit cumbersome. To get around this, use the keyword profile of a domain and cross-reference it against the keywords of YouTube.com, using either your domain or a competitor’s domain. This can be done using SEMRush’s Keyword Gap tool and works best on domains with a robust set of articles and strong performance in organic search.

- Go to Gap Analysis > Keyword Gap

- Enter YouTube.com as the first domain and a topically-relevant reference domain as the second domain

- Click advanced filters

- Filter for Include > Keyword > Contains > “how”

- Filter for youtube.com (organic) Pos. > Less than > 6

- Filter for example.com (organic) Pos. > Less than > 6

A comparison between YouTube.com and Lowes.com returns keywords such as:

- how to remove wallpaper

- how to paint a room

- how to fix a hole in drywall

- how to build a dog house

- how to install a garbage disposal
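The Keyword Gap filters reduce to a set intersection plus two position checks. In this sketch each domain is a hypothetical mapping of keyword to organic position; the numbers are invented for illustration and are not real rankings for any site.

```python
def keyword_gap_overlap(youtube, reference, max_position_exclusive=6):
    """Shared "how" keywords where both domains rank above the cutoff.

    Each argument maps keyword -> organic position (made-up data below).
    """
    shared = youtube.keys() & reference.keys()
    return sorted(
        k for k in shared
        if "how" in k.split()
        and youtube[k] < max_position_exclusive
        and reference[k] < max_position_exclusive
    )


youtube = {"how to remove wallpaper": 1, "how to paint a room": 3,
           "how to fix a hole in drywall": 2, "drywall anchors": 4,
           "how to tile a shower": 8}
reference = {"how to remove wallpaper": 2, "how to paint a room": 5,
             "how to fix a hole in drywall": 1, "drywall anchors": 2,
             "how to tile a shower": 3}
print(keyword_gap_overlap(youtube, reference))
# ['how to fix a hole in drywall', 'how to paint a room', 'how to remove wallpaper']
```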

4. SERP Feature Research via Rank Tracking Tools

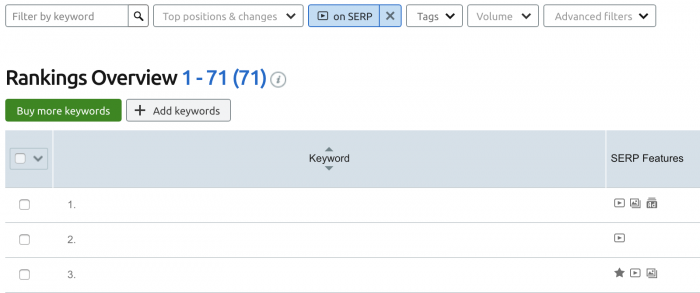

Another approach is to use rank tracking tools that report SERP feature data and to bulk rank check a list of keywords. Tools like SEMRush and STAT both do this. Develop a list of “how to” or procedure keywords using your favorite methods of keyword research. Put that list into your rank checking tool for a single check (there’s no need to monitor these rankings beyond this moment-in-time check). You’ll then be able to filter down to all the keywords that have a video SERP feature.

In SEMRush, this can be done in Projects > Position Tracking by filtering for keywords with the Video SERP Feature.

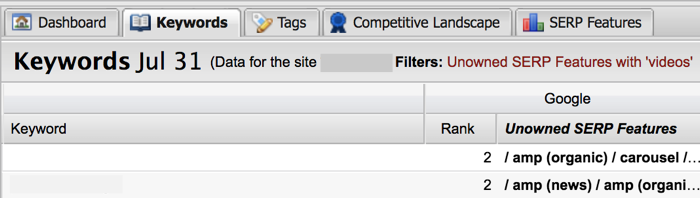

In STAT, this can be done through the SERP Feature tab by clicking through to Video SERP Featured results.

This approach allows you to identify opportunities independent of the keywords within tool databases like SEMRush.

If you have any comments or questions, hit me up on Twitter or leave a comment below.
