Traditional on-page SEO guidance is to target a primary phrase, its closely related terms, and its longtail variants by using them in the text and placing them in strategic locations on the page (e.g., the title, headings, early in the content, and throughout the content). However, writing for Natural Language Processing, or NLP, requires some additional steps and considerations.

Managing on-page SEO for Google’s NLP capabilities requires a basic understanding of the limitations of its parser and the intelligence behind the logic. In practical terms, this is technical SEO for content understanding. Writing for NLP requires clear, structured writing and an understanding of word relationships.

Brief Introduction to NLP

There are many aspects to Natural Language Processing, but we only need a basic understanding of its core components to do our job well as SEOs. In short, NLP is the process of parsing through text, establishing relationships between words, understanding the meaning of those words, and deriving a broader understanding of the text as a whole. I’ll briefly go through the major components and vocab you’ll need.

Major Components of Natural Language Processing

1. Tokenization

Tokenization is the process of breaking a sentence into its distinct terms.

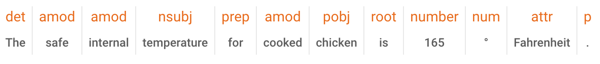
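As a rough illustration of tokenization, here is a minimal Python sketch that assumes a simple regular-expression tokenizer. The pattern is a hypothetical example, not the tokenizer Google uses; it splits text into words (keeping apostrophes inside words), numbers, and individual symbols.

```python
import re

# Hypothetical pattern: numbers (with optional decimals), words that may contain
# an internal apostrophe, or any single symbol that is not a letter, digit, or space.
TOKEN_PATTERN = re.compile(r"\d+(?:\.\d+)?|\w+(?:['’]\w+)*|[^\w\s]")


def tokenize(sentence: str) -> list[str]:
    """Split a sentence into word, number, and punctuation tokens."""
    return TOKEN_PATTERN.findall(sentence)


print(tokenize("The safe internal temperature for cooked chicken is 165° Fahrenheit."))
# ['The', 'safe', 'internal', 'temperature', 'for', 'cooked', 'chicken', 'is', '165', '°', 'Fahrenheit', '.']
```

Note that the number and the degree symbol become separate tokens, which is what later steps rely on when they look for a number paired with a unit.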

2. Parts-of-Speech Tagging

Parts-of-Speech Tagging classifies words by parts of speech (think sentence diagramming in elementary school).

3. Lemmatization

Lemmatization reduces the different forms of a word to a single base form, or lemma, like:

am, are, is = be

car, cars, car’s, cars’ = car
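A minimal sketch of the idea, assuming a tiny hand-written lookup table that mirrors the two examples above. Real lemmatizers combine large dictionaries with part-of-speech-aware rules; the `LEMMAS` table here is purely hypothetical.

```python
# Hypothetical lookup table covering only the examples above.
LEMMAS = {
    "am": "be", "are": "be", "is": "be",
    "cars": "car", "car's": "car", "cars'": "car",
}


def lemmatize(token: str) -> str:
    """Return the base form of a token, falling back to the lowercased token."""
    word = token.lower().replace("’", "'")  # normalize curly apostrophes
    return LEMMAS.get(word, word)


print([lemmatize(t) for t in ["am", "are", "is", "car", "cars", "car’s", "cars’"]])
# ['be', 'be', 'be', 'car', 'car', 'car', 'car']
```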

4. Word Dependency

Word dependency creates relationships between the words based on rules of grammar. This process also maps out “hops” between words.

5. Parse Label

Labeling classifies the type of relationship between two words connected by a dependency (a Stanford paper defines these labels).

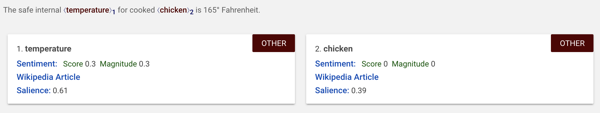

6. Named Entity Extraction

This attempts to identify words with a “known” meaning. Generally, these are people, places, and things (nouns). While pop culture entities tend to come to mind, words like “temperature” and “chicken” are also entities. The set of things Google recognizes as entities is expanding as it gains more “knowledge” of the world. Entities can also include product names. These are, generally, the words that trigger a Knowledge Graph result. However, Google may know that phrases like “title tag” have meaning, even if they aren’t “entities” in the traditional sense.

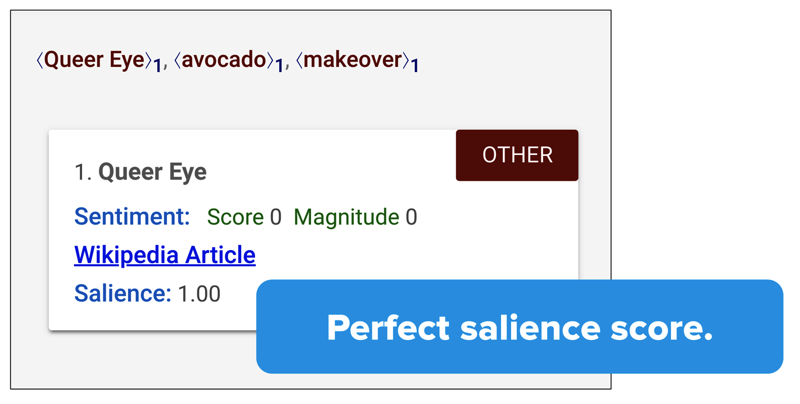

7. Salience

Salience determines how much a text is about something. The more salience, or aboutness, the more relevant that text is to the word/topic. In NLP, this is determined by what we call indicator words. Generally, salience is determined by word co-citation across the web and by the relationships between entities within databases like Wikipedia and Freebase. Google is also likely to apply the link graph to entity extraction in documents to determine these word relationships.

For example, words like “avocado” and “makeover” make a sentence more salient for the show “Queer Eye.”
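To make the indicator-word idea concrete, here is a toy Python sketch. It is not how Google computes salience; it assumes hypothetical, hand-written indicator lists and simply reports what share of each topic's indicator words appear in a text.

```python
import re

# Hypothetical indicator-word lists. In practice these relationships would come
# from co-citation data and entity databases, not a hand-written dict.
INDICATORS = {
    "Queer Eye": {"avocado", "makeover", "fab", "five"},
    "Avocado farming": {"avocado", "orchard", "harvest", "irrigation"},
}


def indicator_scores(text: str) -> dict[str, float]:
    """Share of each topic's indicator words that appear in the text (0.0 to 1.0)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return {topic: len(words & cues) / len(cues) for topic, cues in INDICATORS.items()}


print(indicator_scores("The makeover ended with a lesson on cutting an avocado."))
# {'Queer Eye': 0.5, 'Avocado farming': 0.25}
```

“Avocado” alone is shared by both topics; it is the co-occurrence with “makeover” that tips the text toward Queer Eye.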

8. Sentiment

In short, this is a score, ranging from negative to positive, of the sentiment (view or attitude) expressed about entities in an article.
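A toy Python sketch of entity-level sentiment, assuming a hypothetical word-polarity table and the simplification that an entity inherits the average score of the sentences that mention it. Production systems learn these signals from data; this only shows the shape of the output (one score per entity, from -1.0 to 1.0).

```python
import re

# Hypothetical word polarities from -1.0 (negative) to 1.0 (positive).
POLARITY = {"love": 1.0, "great": 0.8, "fine": 0.2, "bland": -0.5, "awful": -1.0}


def sentence_score(sentence: str) -> float:
    """Average polarity of known words in one sentence; 0.0 if none are known."""
    hits = [POLARITY[w] for w in re.findall(r"[a-z]+", sentence.lower()) if w in POLARITY]
    return sum(hits) / len(hits) if hits else 0.0


def entity_sentiment(text: str, entities: list[str]) -> dict[str, float]:
    """Score each entity by averaging the sentences that mention it."""
    sentences = re.split(r"(?<=[.!?])\s+", text)
    result = {}
    for entity in entities:
        scores = [sentence_score(s) for s in sentences if entity.lower() in s.lower()]
        result[entity] = sum(scores) / len(scores) if scores else 0.0
    return result


print(entity_sentiment("The sauce was great. The chicken was bland.", ["sauce", "chicken"]))
# {'sauce': 0.8, 'chicken': -0.5}
```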

9. Subject Categorization

At a macro level, NLP will classify text into subject categories, such as the content categories returned by the Google Natural Language API.

Subject categorization helps determine broadly what the text is about (and may interact with how topical authority is determined and assigned through the link graph and your body of content).

10. Text Classification & Function

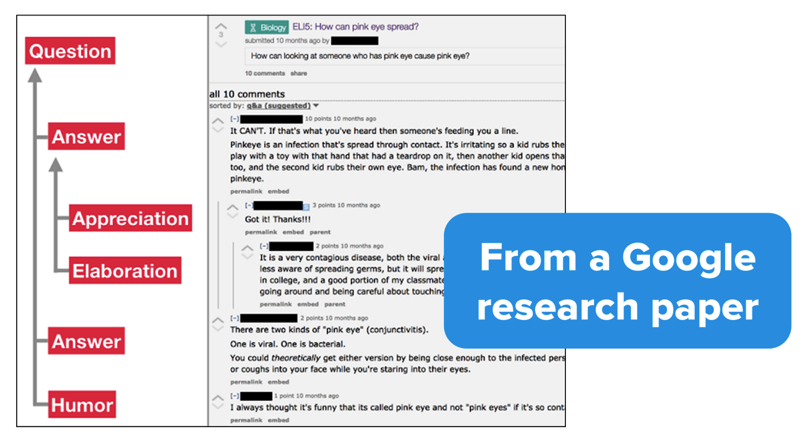

NLP may go further and determine the intended function of the content. In research by Google, there is discussion of classifying user-generated content (UGC) by categories like praise, humor, question, and answer(s).

11. Content-Type Extraction

Google can use structural patterns to determine the content type of a given text without Schema.org structured data. The HTML, the formatting of the text, and the data type of the text (date, location, URL, etc.) can be used to structure the text without additional markup. This process allows Google to determine whether text is an event, recipe, product, or another content type.
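A simplified Python sketch of the idea: detect data types with patterns, then infer a content type from which data types appear together. The patterns and the `CONTENT_RULES` table are hypothetical and far cruder than anything Google would use; they only illustrate inferring “event” or “product” without markup.

```python
import re

# Hypothetical patterns for a few data types.
DATA_TYPES = {
    "date": re.compile(r"\b(?:January|February|March|April|May|June|July|August|"
                       r"September|October|November|December) \d{1,2}, \d{4}\b"),
    "address": re.compile(r"\b\d+ [A-Z][a-z]+ (?:St|Ave|Blvd|Rd)\b"),
    "price": re.compile(r"\$\d+(?:\.\d{2})?"),
    "url": re.compile(r"https?://\S+"),
}

# Hypothetical rules: a content type is inferred when all of its data types appear.
CONTENT_RULES = [("event", {"date", "address"}), ("product", {"price"})]


def detect_data_types(text: str) -> set[str]:
    """Names of every data type whose pattern appears in the text."""
    return {name for name, pattern in DATA_TYPES.items() if pattern.search(text)}


def guess_content_type(text: str) -> str:
    """First content type whose required data types are all present."""
    found = detect_data_types(text)
    for content_type, required in CONTENT_RULES:
        if required <= found:
            return content_type
    return "unknown"


print(guess_content_type("Portland Food Fest, June 8, 2019, at 100 Commercial St."))  # event
print(guess_content_type("Acme Blender, $49.99, free shipping"))  # product
```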

12. Structure Implied Meaning

The formatting of a body of text can change its implied meaning. Headings, line breaks, lists, and nearness all convey a secondary understanding of the text. For example, if text appears in an HTML ordered list or in a series of headings with numbers in front of them, it’s likely a process or ranking. The structure is defined not only by HTML tags but also by visual font size/weight and proximity when rendered.

Units of Understanding

While tools like the free Google NLP tool let us look at this process one sentence at a time, Google is building these relationships across larger chunks of text. At Briggsby, we often say that meaning is determined one sentence at a time, one paragraph at a time, one section at a time, and one page at a time. Effective NLP optimization clarifies meaning at each of these units.

10 Ways to Improve Writing for NLP

There are a handful of practical, actionable changes we can make to better optimize our content for NLP.

1. Connect Questions to Answers

The key to well-optimized content for NLP is simple sentence structure, especially when answering questions. The advice we give our clients is to think about the 1-2 sentence answer you’d expect Google Assistant to provide when you ask it a question.

Basic Format: [Entity] is [Answer].

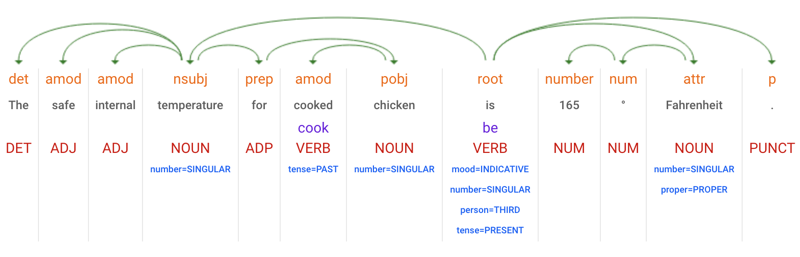

This format reconstructs the question back into a simple statement that includes the answer. If the question is: “safe temperature for chicken,” then the clear answer is “The safe internal temperature for cooked chicken is 165° Fahrenheit.”

2. Identify Units, Classifications, and Adjectives

Within NLP SEO, words have meaning and therefore may have expected units, classifications, or adjectives associated with them. NLP parsing will be on the lookout for these elements when determining if the content contains the precise answer to a question. Let’s look at two examples.

Example Query 1: “Safe Temperature for Chicken”

For this query, temperature has a unit of degrees, in either Fahrenheit or Celsius, expressed as a numerical value. If a sentence does not include these elements, it does not satisfy the question. A well-structured sentence should contain a number and the word “degrees” or the degree symbol (°). If our sentence specifies Fahrenheit or Celsius, the answer is more accurate and specific, while also improving our localized targeting.

Example Query 2: “Title Tag Length”

For this query, Google could identify that “title tag” is a known concept within a subcommunity of web developers and SEOs. The word “length” denotes a dimension that has units, which for the title tag are traditionally characters or, more recently (for the SEO niche only), pixels. Either unit should be expressed with a number. The top-ranking article, from Moz, satisfies this expectation by answering “Google typically displays the first 50–60 characters of a title tag.”
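The expectation in both examples can be sketched as a simple self-audit check. This Python snippet assumes a hypothetical `EXPECTED_UNITS` map from a query concept to the number-plus-unit pattern an answer should contain; it is a writing aid, not a model of Google's parser.

```python
import re

# Hypothetical map from the concept in a query to the answer pattern it expects.
EXPECTED_UNITS = {
    # A number followed by a degree symbol or "degree(s)", optionally with F/C.
    "temperature": re.compile(
        r"\d+(?:\.\d+)?\s*(?:°|degrees?)\s*(?:Fahrenheit|Celsius|F|C)?", re.IGNORECASE),
    # A number or range (hyphen or en dash) followed by characters or pixels.
    "length": re.compile(r"\d+(?:[–-]\d+)?\s*(?:characters|pixels)", re.IGNORECASE),
}


def has_expected_answer(concept: str, sentence: str) -> bool:
    """True if the sentence pairs a number with a unit the concept expects."""
    pattern = EXPECTED_UNITS.get(concept)
    return bool(pattern and pattern.search(sentence))


print(has_expected_answer("temperature", "The safe internal temperature for cooked chicken is 165° Fahrenheit."))  # True
print(has_expected_answer("length", "Google typically displays the first 50–60 characters of a title tag."))  # True
print(has_expected_answer("temperature", "Cook the chicken until it is done."))  # False
```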

3. Reduce Dependency Hops

Determining whether a sentence answers a question depends on Google’s NLP parsers not getting hung up as they “crawl” through the sentence. If a sentence’s structure is overly complicated, Google may fail to create clear links between words, or it may need too many hops to build that relationship.

The goal here is to reduce the total number of hops to get from the subject to the question to the answer.

Example Sentence 1:

“The safe internal temperature for cooked chicken is 165° Fahrenheit.”

Map the journey from chicken to temperature to the answer:

chicken <-> for <-> temperature <-> is <-> Fahrenheit <-> degree <-> 165

We can get from chicken to a numerical value in 6 hops with a clear journey and relationship between the two.

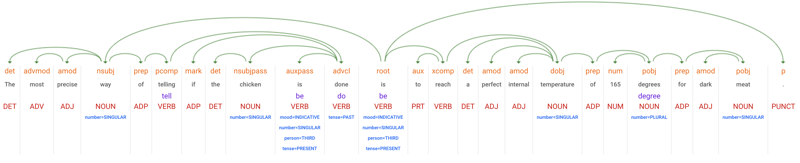

Example Sentence 2:

“The most precise way of telling if the chicken is done is to reach a perfect internal temperature of 165 degrees for dark meat.”

Mapping it out:

chicken <-> done <-> telling <-> of <-> way <-> is <-> reach <-> temperature <-> of <-> degrees <-> 165

This sentence has 10 hops between the noun of the question and the numerical answer. The increased complexity may lower confidence in the answer or get the NLP hung up on understanding a sentence entirely. These types of embellishments are common for writers but can hinder content performance.

To optimize text for NLP, you want to reduce these hops and create a more explicit journey between the question and the answer by using simplified grammar and clear writing.
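Counting hops is a shortest-path problem over the dependency links. The Python sketch below runs a breadth-first search over an undirected graph. As an assumption, its edges are taken directly from the two hand-mapped paths above rather than from a real parser's output, and the repeated word “of” is labeled `of#1` and `of#2` so the two occurrences stay distinct nodes.

```python
from collections import deque


def count_hops(edges, start, goal):
    """Fewest dependency links between two words, via breadth-first search."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        node, hops = queue.popleft()
        if node == goal:
            return hops
        for neighbor in graph.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, hops + 1))
    return None  # no path between the two words


def chain(*words):
    """Turn an ordered path like the ones mapped above into a list of edges."""
    return list(zip(words, words[1:]))


sentence_1 = chain("chicken", "for", "temperature", "is", "Fahrenheit", "degree", "165")
sentence_2 = chain("chicken", "done", "telling", "of#1", "way", "is", "reach",
                   "temperature", "of#2", "degrees", "165")
print(count_hops(sentence_1, "chicken", "165"))  # 6
print(count_hops(sentence_2, "chicken", "165"))  # 10
```

With a full dependency tree from a parser, the same search would find the shortest route even when the words are not laid out as a single chain.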

4. Don’t Beat Around the Bush

A common NLP problem is “beating around the bush” when answering a question. It’s not that these answers are “wrong,” but they don’t give Google a precise determination of the answer.

Let’s look at the query: “how often to wash hair.”

Example Text 1:

It seems as though we’re all divided when it comes to how often we should be washing our hair. Some people swear that washing less frequently makes their hair healthier, while others insist that double-shampooing is actually the way to go.

…

But how does your haircare routine stack up against the rest of America? A recent survey conducted by Lookfantastic set out to measure just that. It turns out that 49% of women polled admitted to washing their hair every damn day, which means that we, as a nation, are totally split 50/50.

…

If you’re not sure how often you should be washing your hair, it’s always a good idea to check in with your stylist next time you’re at the salon.

Can you find the precise answer in there? How confident is Google that the answer appears in that text?

Example Text 2:

If your skin and hair are anywhere from normal (not super oily and not super dry) to dry, you probably only need to wash it once or twice a week, according to a Columbia University health column. If you have a greasy scalp, you probably need to wash your hair more often.

This second example provides a much clearer answer to the question. Identifying a lack of clarity in content is an important step when auditing content for NLP. If Google is unable to parse a definite answer, its confidence in search task completion and relevance goes down.

5. Avoid Unclear Antecedents

As a quick grammar refresher, a pronoun, such as “she,” “it,” or “they,” can stand in for a noun. The antecedent is the noun the pronoun is meant to represent. When pronouns are introduced, it can become unclear which noun is being referenced.

A classic example is:

The folder was on the bus, but now it’s gone.

Which is gone? Is it the bus or the folder?

The problem can become compounded when NLP has to carry meaning from sentence to sentence. Even if an antecedent is clear when looking at a single sentence, it can become unclear when meaning is carried from sentence to sentence.

Example Text:

Title tags are an essential part of optimizing a page for SEO. On a podcast last week, John suggested they should be less than 60 characters.

It’s possible to read “they” as a reference to podcasts. The meaning could be “podcasts should be less than 60 characters long,” even though this doesn’t make logical sense. As readers, we’re able to make the intellectual leap to know that “they” refers to “title tags,” but Google may fail to make that connection.

As a result of this unclear antecedent, NLP may conclude that this text does not contain the answer to “title tag length.” The best practices listed above should help minimize this, but pay particular attention when you split the question/subject and the answer across two separate sentences.

6. Be “Correct” and Clear

It’s possible to be too smart for Google. Saying that Google can understand text is like saying Google could crawl JavaScript several years ago. Yes, it could, but there were, and still are, a ton of caveats. Understanding those caveats helps us ensure that Google not only can see our content accurately (rendering) but can also read and extract meaning from that content. Generally, Google is pretty “dumb” when it comes to reading.

The lack of a distinct answer can get you in trouble when your text is “correct” but perhaps too complex for Google to determine whether it answers a question.

Example Text:

The optimal length for a title is determined by how much Google can show in their search results. The way the results look might differ depending on the device you’re using.

…

If you’re asking “how many characters does Google show?”, the answer is: “it depends.” Google doesn’t count a particular number of characters but has a fixed width in which it can show the title.

…As the optimal title length for SEO differs per type of result, we would suggest getting your most important keyword in the first half of the title.

This answer isn’t incorrect. It’s exceptionally accurate. However, Google’s reading comprehension abilities will struggle to determine if this text answers a question about the ideal title tag length, especially when compared to Moz’s “Google typically displays the first 50–60 characters of a title tag.” The more complicated answer also doesn’t satisfy Google’s goal of simple responses for featured snippets and voice search.

While this simplification will lead to better SERP performance, it can be frustrating when writing about complex, nuanced subjects. There may not be a simple answer, but right now the sites that give the precise answer get a disproportionate advantage due to featured snippets and NLP weighting.

7. Boost Your Salience

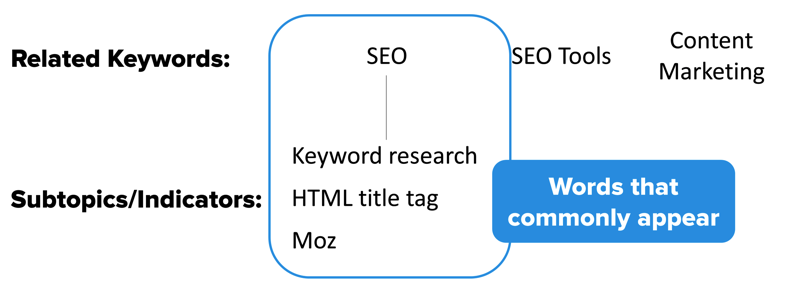

Traditional keyword targeting focuses on keyword placement, phrase/broad match variants, and closely related keywords. As NLP improves, keyword salience becomes more critical than naïve targeting practices that have been standard for 10 years.

Related keywords typically refer to either “people also search” or “page also ranks for” terms, but indicator keywords (as we call them) are the words that commonly appear alongside a keyword and can “build up” to it. The use of these terms improves an article’s “aboutness” for its target keyword.

Using these keywords in your text can improve its salience for a given word – similar to the Queer Eye, avocado, and makeover example earlier.

Subject matter experts tend to identify and use these phrases naturally when writing about a topic (a great reason to spend more on better writers). However, you can improve on this by intentionally adding a step to your brainstorming process, as well as to your internal documentation and training.

Related keyword tools can often fail you at this stage. Let’s look at the related keyword recommendations of popular tools when you put in “title tag.”

| SEMRush | Moz | Google Suggest |
|---|---|---|
| what is the title tag | title tag html | title tag length 2018 |
| keyword is title tag | meta title tag | html title tag |
| what is a title tag | title tag definition | title tag definition |
| title tag seo | meta title example | title tag and meta description |
| page title tag | title tag and meta description | title tag vs h1 |

These tools lean heavily on “keywords people also search” style related terms. If you parse these out, there are some indicator keywords, but mostly they’re variants of the target term.

However, a subject matter expert might brainstorm a list such as:

- Search result page, SERP

- HTML, Head

- Length, characters

- Web browser display, bookmark

- Social networks, Facebook, Open Graph, Twitter

- SEO, On-page SEO, keyword targeting

- Meta tags

The goal of including these indicator keywords above is not to target or rank for them, but to bolster the salience or aboutness of the target phrase.
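Once the brainstormed list exists, checking a draft against it is easy to automate. This Python sketch assumes the list above, split into individual phrases, and reports which indicator keywords never appear in a draft. It uses whole-word, case-insensitive matching so that “head” does not match “heading.” The function name is hypothetical.

```python
import re

# Indicator keywords for "title tag", taken from the brainstormed list above.
INDICATOR_KEYWORDS = [
    "search result page", "SERP", "HTML", "head", "length", "characters",
    "web browser display", "bookmark", "social networks", "Facebook",
    "Open Graph", "Twitter", "SEO", "on-page SEO", "keyword targeting", "meta tags",
]


def missing_indicators(draft: str, keywords: list[str]) -> list[str]:
    """Indicator keywords that never appear in the draft (whole words, any case)."""
    missing = []
    for keyword in keywords:
        pattern = r"\b" + re.escape(keyword) + r"\b"
        if not re.search(pattern, draft, re.IGNORECASE):
            missing.append(keyword)
    return missing


draft = "The title tag sits in the HTML head and appears on the SERP."
print(missing_indicators(draft, INDICATOR_KEYWORDS))
```

The output is a prompt for the writer, not a checklist to stuff in; some indicators will not fit every article.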

8. Follow the Query Through

An extension of salience is addressing the question, “Does this article answer all the subjects and questions a searcher might have when they search?” Google can identify these follow-through topics and questions by looking at follow-up searches and query refinements within search sessions. Google can improve searcher satisfaction if it’s able to satisfy searchers sooner by giving them content that eliminates the need for two to three additional searches.

If a user searches for “Emergency Fund,” they may have the following goals on their journey:

- What is an Emergency Fund

- How Much to Save

- Types of Emergency Funds

- How to Build an Emergency Fund

Try to cover these secondary topics in your articles. The depth of subjects covered depends on how broad the initial target keyword is. If the target phrase is a broad, head term, there may be many secondary topics, but if it’s a specific longtail phrase, there may be none. By doing this, you’ll naturally pick up additional indicator keywords that improve salience.
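To illustrate what “looking at follow-up searches” could mean, here is a hypothetical Python sketch that counts which queries follow a seed query within the same session. The session data is made up, and this is an assumption about the general kind of analysis involved, not a description of Google's actual method.

```python
from collections import Counter

# Hypothetical search sessions: each is the ordered list of queries one user made.
sessions = [
    ["emergency fund", "how much to save emergency fund", "high yield savings account"],
    ["emergency fund", "how much to save emergency fund"],
    ["emergency fund", "how to build an emergency fund"],
    ["budget app", "emergency fund"],
]


def follow_up_queries(sessions, seed):
    """Count queries that come after the seed query, once per session."""
    counts = Counter()
    for queries in sessions:
        if seed in queries:
            after = queries[queries.index(seed) + 1:]
            counts.update(set(after))  # count each follow-up once per session
    return counts.most_common()


print(follow_up_queries(sessions, "emergency fund")[0])
# ('how much to save emergency fund', 2)
```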

We often train writers to think through the journey of the searcher, relevant vocab terms, what secondary subjects a reader might need to know, and the frequently asked questions. These are included in the outline or brief and should be answered throughout the content. While SEO tools can be handy here, we’ve found that subject matter experts can do this more effectively than SEO tools alone.

9. Disambiguate Entities

Simplify your content around entities you want Google to extract successfully. There are two simple rules here:

- Isolate the entities when not used in a sentence. When an entity is used outside of a sentence, try to isolate it in the text and within the HTML tag where it appears, such as a heading, list item, or table cell. If you’re using bold text as a “list label” or “list summary,” as I’m doing with this paragraph, put the entity in the tag by itself. Avoid grouping it with a price, year, category, parentheses, or any other data/text.

- Use indicator words to disambiguate entities. When an entity can be confused, such as cities in multiple states, movies with the same name, or films vs. books, you can disambiguate entities by using indicator words in the same sentence. For the sentence “Portland is a great place to live,” the extracted entity is Portland, OR. For the sentence, “the Old Port neighborhood in Portland is a great place to live,” the extracted entity is Portland, ME. There is an entity relationship between “Portland (ME)” and “Old Port,” which allows Google to disambiguate the entity “Portland.” Brainstorm these indicator words when your entities could have multiple identities.
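The Portland example can be sketched in Python as a scoring rule: prefer the candidate entity with the most indicator words in the sentence, and fall back to the more common meaning when there are none. The candidate list, the `prior` values, and the indicator words are all hypothetical stand-ins for the entity relationships Google maintains.

```python
import re

# Hypothetical candidates for the ambiguous mention "Portland". "prior" stands in for
# how often the bare name means each place; "indicators" stand in for entity
# relationships such as "Old Port" -> Portland, ME.
CANDIDATES = {
    "Portland": [
        {"id": "Portland, OR", "prior": 0.8, "indicators": ["oregon", "willamette", "pearl district"]},
        {"id": "Portland, ME", "prior": 0.2, "indicators": ["maine", "old port", "casco bay"]},
    ]
}


def disambiguate(mention: str, sentence: str) -> str:
    """Pick the candidate with the most indicator words; break ties by prior."""
    text = sentence.lower()

    def score(candidate):
        hits = sum(1 for cue in candidate["indicators"]
                   if re.search(r"\b" + re.escape(cue) + r"\b", text))
        return (hits, candidate["prior"])

    return max(CANDIDATES[mention], key=score)["id"]


print(disambiguate("Portland", "Portland is a great place to live."))  # Portland, OR
print(disambiguate("Portland", "The Old Port neighborhood in Portland is a great place to live."))  # Portland, ME
```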

10. Use Structure to Convey Meaning

Structure and formatting have semantic meaning. These can be used to convey additional context for NLP understanding.

Here are some general best practices and concepts:

- Inverted Pyramid: Articles have a lede, body, and a tail. Content has different meanings based on how far down the page it appears.

- Headings: A heading defines the content between it and the next heading.

- Subtopics: Think of headings as sub-articles within the parent article.

- Proximity: Proximity determines relationships. Words/phrases in the same sentence are closely related. Words/phrases in the same paragraph are related. Words/phrases in different sections are distantly related.

- Relationships: Subheadings have a parent -> child relationship. (A page with a list of categories as H2s and products as H3s is a list of categories. A page with a list of products as H2s and categories as H3s is a list of products.)

- HTML Tags:

  - Text doesn’t have to be in a heading tag to be a heading. (However, heading tags are preferred.)

  - Text also doesn’t need a heading tag to have a parent -> child relationship. Its display and font formatting can visually dictate this without the use of heading tags. (However, heading tags are preferred.)

- Lists:

  - HTML ordered and unordered lists function as lists, which have a meaning.

  - Headings can also perform as lists.

  - Headings with a number first can work as an ordered list.

  - Ordered lists imply rankings, order, or process.

  - Short bolded “labels” or “summary” phrases at the start of a paragraph can function as a list.

- Other Formats:

  - Tables inherently imply row/column relationships.

  - Some formatting suggests classification, like addresses and date formats.

- Structure by Content Type: Some content types have expected data that define them. Events have names, locations, and dates. Products have names, brands, and prices.
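The parent -> child point about headings can be sketched in Python. This assumes a page outline has already been extracted as hypothetical `(level, text)` pairs; it groups H3s under their H2, and the H2s are what the page reads as “a list of.” The product and category names are invented for the example.

```python
def outline(headings, parent_level=2):
    """Group (level, text) headings into (parent, [children]) pairs.

    Headings one level below the parent level become its children. Anything
    before the first parent heading is ignored in this simplified sketch.
    """
    groups = []
    for level, text in headings:
        if level == parent_level:
            groups.append((text, []))
        elif level == parent_level + 1 and groups:
            groups[-1][1].append(text)
    return groups


def list_of(headings):
    """The parent headings are what the page reads as 'a list of'."""
    return [parent for parent, _ in outline(headings)]


categories_first = [(2, "Blenders"), (3, "Acme Blender"), (2, "Toasters"), (3, "Crispy Toaster")]
products_first = [(2, "Acme Blender"), (3, "Blenders"), (2, "Crispy Toaster"), (3, "Toasters")]
print(list_of(categories_first))  # ['Blenders', 'Toasters']
print(list_of(products_first))    # ['Acme Blender', 'Crispy Toaster']
```

The same four headings produce a list of categories or a list of products depending only on which items sit at the parent level.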

How to Implement this in Your Content Program

Google’s advances in NLP require SEOs and content programs interested in driving distribution through organic search to start thinking more about the technical limitations Google has in understanding how we write. We’ve seen success in the last year by implementing these best practices. Currently, there are few tools that help guide this process, but a few tweaks to your process and training can cover a lot of ground.

- Update training material and checklists to include an overview of these ten improvements to writing style. Our training material has a heavy emphasis on featured snippet targeting. The sole goal isn’t featured snippets, but focusing on them can drive broader success. Writing for featured snippets is writing for NLP.

- Give writers featured snippet targets to pursue in each article, which are tracked and celebrated when won (along with an explanation of why their copy worked so well).

- Place significant emphasis on readability and governance around content templates and formatting guidelines, which force articles into best practices around formatting and structure.

- Introduce an additional stage to keyword research and creative brief development that brainstorms indicator keywords, search journey topics, and question keywords. This process also includes a step of researching the topics covered by the top 5 ranking articles for the keyword.

- Create a short-term iterative process of SEO QA on articles before publication, which provides feedback to writers/editors on their first few articles after training.

If you have any comments or questions, hit me up on Twitter or leave a comment below.

Justin:

I loved this article. I have recently become fascinated with this area of SEO. I think we often ramble too much with SEO writing, because we have been told that an article has to be 1,000 words to get noticed by Google. I never thought I would be looking at sentence mapping again. That was way back in the journalism school days.

PS. You might get a second pair of eyes to read your stuff. I’m as guilty as anyone, but I saw a couple of typos.

Thanks! Sadly, I ran it through Grammarly and had another person proof/edit. I still found a few typos after I hit publish and fixed them this morning. I’m sure there are still some in there hiding from me.

No worries. I’ve signed up for a course on Udemy to learn a little Python and NLP. This is the future whether we like it or not.

Love following your stuff Justin – definitely will help to point our writers here in the future.

I always struggle when it comes to question queries. More specifically, whether a question should be answered on a dedicated, single page (where the question and answer are obvious and clear) or whether the question should be featured as part of a faq section on a broad topic page.

How do you guys see it? Would you roll out 50 individual pages, each aimed at one question, if each had search volume?

Thanks for the work you are doing

Thank you!

This one is always tough and is something we get asked about a lot. Typically, we’ll look at the SERPs to decide which approach to take. Often, there may not be a right answer.

For example, if we look at “emergency fund,” there could be two potential questions:

1) Why do I need an emergency fund?

Specific articles that directly target the query rank well.

2) How do I build an emergency fund?

While there are a lot of specific articles, NerdWallet ranks well with a broad topic page, which can happen when you have a lot of authority (topical and links).

When looking at specific questions, it can vary:

1) bike size for 7 year old

Broad guides outperform specific answers. However, this may simply be a content gap. If a large, authoritative brand in this space were to create a simple guide for every year + gender + adult/kid combination, it might be able to quickly grab tens of thousands of visits.

2) income tax in mn

Specific answers outperform broad guides.

3) 650 credit score

Specific answers outperform broad guides.

You can also identify areas where specific articles work well when Google is “reaching” for a good result by ranking UGC sites like forums and Q&A.

However, sometimes there is mixed intent. For example, “how to condition hair” has two intents: how to condition and how to wash your hair overall. Google ranks a few articles for one intent and then a few articles for the broader intent (even though they don’t match the keyword). In situations like that, we have to pick which approach we’re going to take or do both to see which one sticks.

One method we’ve used is to write a broad, robust article first while trying to cover several aspects of the topic. We wait for it to start ranking well, then mine Google Search Console for the keywords where we rank in positions 6 through 15. These are typically good candidates for longtail, specific follow-up posts.
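A small Python sketch of that filter, assuming you have exported query rows with their average position from the Search Console Performance report. The rows below are made-up examples, and the 6-15 window is taken from the reply above.

```python
# Hypothetical (query, average position) rows exported from Search Console.
rows = [
    ("emergency fund", 3.2),
    ("how much emergency fund", 7.8),
    ("emergency fund vs savings", 12.4),
    ("where to keep emergency fund", 18.0),
]


def follow_up_candidates(rows, low=6, high=15):
    """Queries whose average position falls in [low, high], best position first."""
    picks = [(query, pos) for query, pos in rows if low <= pos <= high]
    return [query for query, _ in sorted(picks, key=lambda row: row[1])]


print(follow_up_candidates(rows))
# ['how much emergency fund', 'emergency fund vs savings']
```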

I think it also varies based on a site’s penetration in a market and its authority. If it’s an authoritative site, I might focus on the broad articles first and then work out to more specific terms as we hit diminishing returns. However, a small or newer site would be wasting resources if they have no chance of ranking for a competitive term, so medium to more specific topics might get more traction early on.

Another way we think about it is building a “body of content.” Google seems to give sites more topical authority in a subject area based on the volume of content that we have on that subject. If we see evidence that we might have a topical authority gap, we’ll flesh out more specific articles not just to rank but to pad out the “body of content” we have on that subject.

Thanks for the epic reply Justin.

Like the idea of building a robust piece and then using Search Console to determine which topics to build out.

I tend to use the actual SERPs to see what is ranking to help guide my thoughts. The Ahrefs Parent Topic feature has helped here too.

I’ve also used intitle: searches to see if specific content exists, to help determine the route to take and to identify whether specific content CAN rank.

Again, thanks a tonne Justin.

This is great Justin. It’s always refreshing to read your content and makes me think I am doing something right.

I am big on content SEO and topical relevance (publishing lots of similar content on the same subdirectory/site). When I hire writers, I ask them to read Moz’s Beginner’s Guide to SEO, and I explain to them how Google parses content, how they should construct sentences, how they could write better by answering more questions, and that they should write in a way 12-year-olds will understand. My team has run many blogs, and our most successful blog had these lessons codified from the beginning.

The lessons here just put theory to what I knew in practice, and I will most definitely be adding this blog post to their training material. Right now, I am focused on tripling that blog’s traffic through content audits and adding new content, and I am sharing my progress with 200 people in a WhatsApp group. I will definitely be sharing this post there too.

Since we are on the topic, I wonder if this way of creating SEO content will be spammed and Google will release an update to demote pages that are heavily optimized for Google’s NLP.

I will be starting a large content site (500K+ pages) from scratch (no backlinks) in a highly competitive niche with lots of evergreen content. All content will be structured content. Do you have any tips for me? 🙂

Thank you! 🙂

The SEO industry always finds a way to spam new opportunities until Google has to create a new update to demote them. I could see that coming in terms of spammers using NLP or ML to autogenerate content or getting too granular with their content while trying to target Featured Snippets (like old pre-Penguin days).

Also, good luck on the new large content site. Some advice is:

1) Make sure it’s relatively “flat” so most content is discovered in the first few levels (click-depth). That’s a lot of content, so click-depth and equity distribution is important. Also focus on “deep links” to articles.

2) Focus early on “topical authority” by building out hub & spoke style content around specific niches or keyword groups, instead of spreading out too thin. Strategic cross-linking within a niche can be very powerful.

3) With that much content, you’re going to need a lot of link equity. Resources, tools, data, and studies are a great way to acquire a lot of backlinks quickly (this is always really hard).

4) Find keywords where you might have “leverage.” This could be opportunities where you can do it better, faster, and with a better match to searcher intent. What can you do faster and with a more differentiated approach than others?

5) Focus on Featured Snippets on keywords where you can rank in top 5. Featured Snippets tend to get pulled from top results.

Also, I’d recommend studying sites like NerdWallet and ValuePenguin. They’ve built really impressive programs in the last few years that grew “fast.”

NW’s Debt Study is a great example of mixing other people’s data with your own survey data to build a really great link target:

https://www.nerdwallet.com/blog/average-credit-card-debt-household/

NW creates great high quality tools:

https://www.nerdwallet.com/mortgages/how-much-house-can-i-afford/calculate-affordability

Research how they do their sidebars on these two templates to build hub & spoke clusters of content:

https://www.nerdwallet.com/blog/mortgages/homebuying-process-expectations/

https://www.nerdwallet.com/article/tips-for-first-time-home-buyers

ValuePenguin did a great job of finding an early content type / niche, which was Averages:

https://www.valuepenguin.com/average-cost-of-homeowners-insurance

https://www.valuepenguin.com/average-credit-card-debt

https://www.valuepenguin.com/average-credit-card-interest-rates

https://www.valuepenguin.com/average-cost-vacation

These not only align to search volume but are also really great references for journalists writing articles. This helped them scale link acquisition fairly fast. They hit that content approach very hard and did outreach to journalists/bloggers. They created embeddable charts/tables of data and customized images for outreach. There was a lot of clever and integrated promotion strategy.

Whoa, thank you for the great article!

Sometimes we don’t put much effort into writing and just write a lot of words, thinking Google might not read them well 🙂

NLP is another level of content creation.

Hi,

Why do you recommend this?

“Avoid grouping it [entity] with a price, year, category, parentheses, or any other data/text.”

Which is the better title tag / H1:

A. 20 best restaurants in Los Angeles in 2018

B. 20 best restaurants in Los Angeles

C. Best restaurants in Los Angeles

Thx

PS Great article!

Thanks!

I think any of these will work. Sites appear to be ranking with and without the numbers.

It’s ok to include the year in the H1 or title.

What I meant was to avoid this:

H2 Best BBQ

H3 Restaurant 1

H2 Best Sushi

H3 Restaurant 2

H2 Best Italian

H3 Restaurant 3

This is a list of food categories instead of a list of restaurants. The names of the restaurants are sub points under the categories. It’s harder to extract as a Featured Snippet.

And to avoid this:

H2 Restaurant 1 (Best BBQ)

H2 Restaurant 2 (Best Sushi)

H2 Restaurant 3 (Best Italian)

This approach doesn’t disambiguate the restaurant name as well, which can reduce Google’s confidence that this post is a list of restaurants. It’s harder to extract as a Featured Snippet.

Instead, do something like this:

H2 Restaurant 1

H3 Best BBQ Restaurant

H2 Restaurant 2

H3 Best Sushi Restaurant

H2 Restaurant 3

H3 Best Italian Restaurant

This change makes it a list of restaurants instead. Google could pull the first 5-7 H2s from the post and have a list for Featured Snippets.

For H1/Titles:

It may be worth testing the titles to see if one gives you a better CTR than the other. It may also be worth testing a round, even number like 20 vs. an odd prime number like 17 to see which might resonate better.

Using the year in the title could give you a slight edge if you’re struggling to rank for the general term. It may be easier to rank on the specific year keyword faster than the general term. Several of the sites that rank update their rankings monthly or by season. If you go the annual approach, use the same URL each year and just update the content for that year/season/month while leaving the URL the same. This prevents any equity loss or the need to 301 redirect.

Superb article, Justin. SEOs would be wise to study this information very carefully!

Very pleasantly surprised, good read. Bloody good job!

Justin,

I found you thanks to AJ Kohn, and I have to say that this blog is really packed with great insights! It’s hard not to fall in love with NLP after reading this article!

I’m having trouble fitting my (very basic) notions of NLP into the design of a site structure. I checked the Google NLP API and found its content categories very interesting. I’d be interested to know your thoughts on using this function to create the basic structure of a website.

Cheers

Wow, looks like I’m late to the party 🙂 Awesome stuff, Justin!

Quick q, regarding salience, are there any tools that you’ve found useful in researching related keywords? There seem to be products specifically designed for this purpose, such as Clearscope and MarketMuse, though they tend to come at a premium (at least compared to your average non-enterprise SEO tool).

Sorry for the late reply here. We haven’t found any good ones that I can recommend. Most common SEO tools focus on “also searched” or phrase match type related words. With our clients, we focus on subject matter expertise, research, and brainstorming. It doesn’t scale very well but has been successful.

One of the best articles I read recently. It was my missing piece in Featured Snippets optimization!